"Is the work you do "hacking," or is it science? Do you just try things until they work, or do you start with a theoretical insight?"

"It's very much an interplay between intuitive insights, theoretical modeling, practical implementations, empirical studies, and scientific analyses. The insight is creative thinking, the modeling is mathematics, the implementation is engineering and sheer hacking, the empirical study and the analysis are actual science. What I am most fond of are beautiful and simple theoretical ideas that can be translated into something that works"

– Yann LeCun

What triggered the Artificial Intelligence Revolution?

Cognitive science is the scientific discipline concerned with understanding and simulating the mechanisms of thought, whether human, animal, or artificial. Artificial intelligence is the set of theories and techniques used to build applications capable of simulating intelligence in different contexts. Such an application is a complex information-processing system capable of learning from examples or experience, thereby acquiring knowledge and replicating human problem-solving.

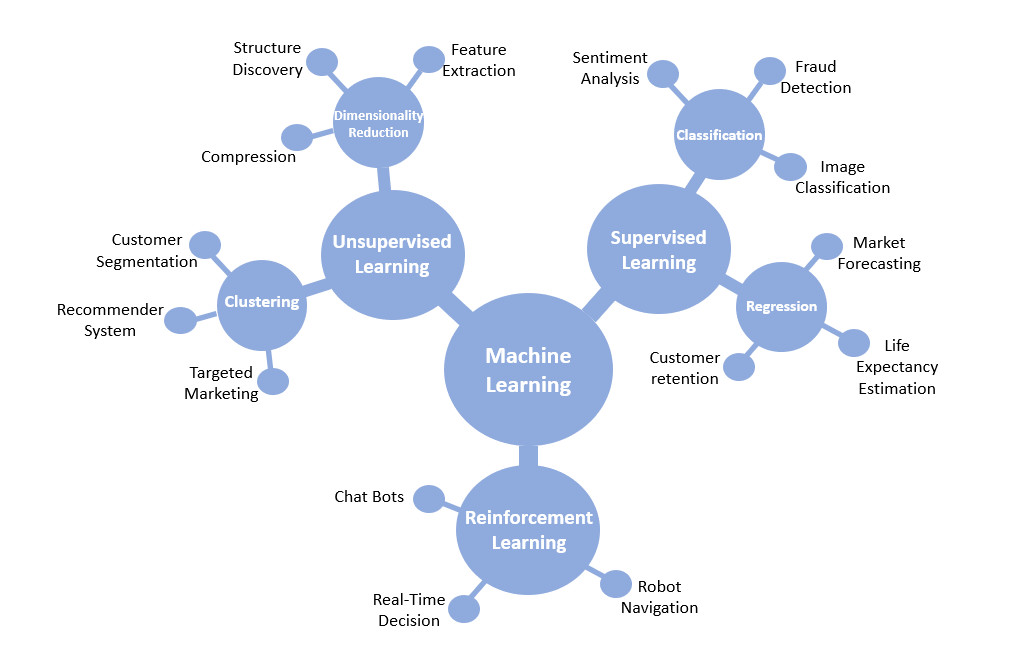

Among the fields of study of artificial intelligence, we find statistical learning, or machine learning (ML). It covers the analysis, design, and implementation of algorithms that learn from a data set, called the "training set", to perform a specific task.

Machine learning, as used in today's applications, can be argued to be an art in itself. We must first understand that the application of such techniques depends profoundly on the problem at hand. Decisions about the model architecture, the methods to use, and how estimation should be performed therefore depend on the experience of the data scientist implementing the solution. The success of a given application, and how efficiently a problem is solved, depends heavily on the skills and experience of the person implementing it. This is even more true since the success of Deep Learning.

Indeed, the past decade has seen several impressive breakthroughs in the field of artificial intelligence, due especially to recent advances in Deep Learning, a subfield of machine learning. In this article, we will discuss some of the most successful and well-known applications of Deep Learning and the context behind their success. To do so, we need to discuss the concept of artificial neural networks, as they are at the heart of the artificial intelligence revolution brought about by Deep Learning.

Machine learning algorithms are commonly grouped into two categories: Supervised Learning and Unsupervised Learning (Reinforcement Learning is a third category, which will be left for a future discussion). Supervised Learning consists of predicting one or several outputs based on a labeled training set. Unsupervised Learning, by contrast, groups together observations with common features, a useful approach for identifying trends in the data or common themes in documents. In this context, there is a family of models that use artificial neural networks to perform both supervised and unsupervised tasks.
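To make the distinction concrete, here is a minimal sketch in plain Python. The toy data, the nearest-centroid classifier, and the two-cluster k-means loop are illustrative assumptions chosen for brevity, not methods taken from this article: the first learner uses the labels, the second sees only the raw points.

```python
from statistics import mean

# Hypothetical 1-D toy data: two groups of points, around 1.0 and 5.0.
points = [0.9, 1.1, 1.3, 4.8, 5.0, 5.4]
labels = ["low", "low", "low", "high", "high", "high"]  # used by the supervised learner only


def fit_centroids(xs, ys):
    """Supervised: learn one centroid per label from a labeled training set."""
    return {label: mean([x for x, y in zip(xs, ys) if y == label]) for label in set(ys)}


def predict(centroids, x):
    """Predict the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(centroids[label] - x))


def two_means(xs, iterations=10):
    """Unsupervised: split xs into two clusters with a basic k-means loop (k=2)."""
    centers = [min(xs), max(xs)]  # simple deterministic initialization
    clusters = [[], []]
    for _ in range(iterations):
        clusters = [[], []]
        for x in xs:
            nearest = 0 if abs(x - centers[0]) <= abs(x - centers[1]) else 1
            clusters[nearest].append(x)
        centers = [mean(c) if c else centers[i] for i, c in enumerate(clusters)]
    return clusters


centroids = fit_centroids(points, labels)
print(predict(centroids, 1.2))  # label predicted for a new, unseen point
print(two_means(points))        # groups found without using any labels
```

The supervised learner can only be trained because someone provided the labels; the clustering loop recovers a similar grouping from the structure of the data alone.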

An artificial neural network is a collection of connected nodes (called artificial neurons) that mimics the structure of the human brain, where a vast network of interconnected units underlies the way we process information.

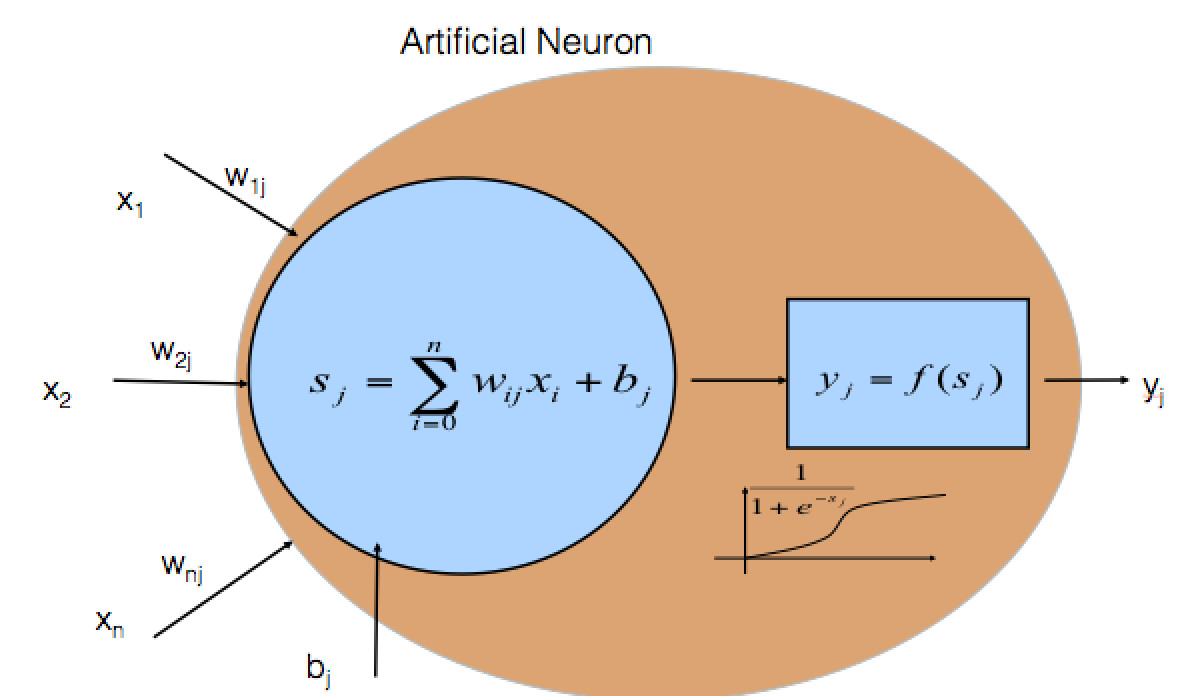

Figure 1: An example of an artificial neuron

A single artificial neuron (Figure 1) receives an input (data) that is transformed by a very simple linear function (think of a statistical regression) and then smoothed out through an activation function to produce an output. Under this scheme, a single neuron can be thought of as reproducing a simple linear regression model, and an artificial neural network is then a more complex and flexible model that stacks regressions upon regressions.
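The computation performed by one neuron can be sketched in a few lines of Python. The input values, weights, and bias below are hypothetical numbers chosen only for illustration, and the sigmoid is just one common choice of activation function.

```python
import math


def sigmoid(z):
    """Logistic activation: squashes any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


# Hypothetical values chosen only for illustration.
x = [0.5, -1.0, 2.0]
w = [0.4, 0.3, -0.2]
b = 0.1

print(neuron(x, w, b))                          # sigmoid activation
print(neuron(x, w, b, activation=lambda z: z))  # identity activation: a plain linear model
```

With the identity activation the neuron is exactly a linear regression prediction; with the sigmoid it has the same form as a logistic regression.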

What is Deep Learning?

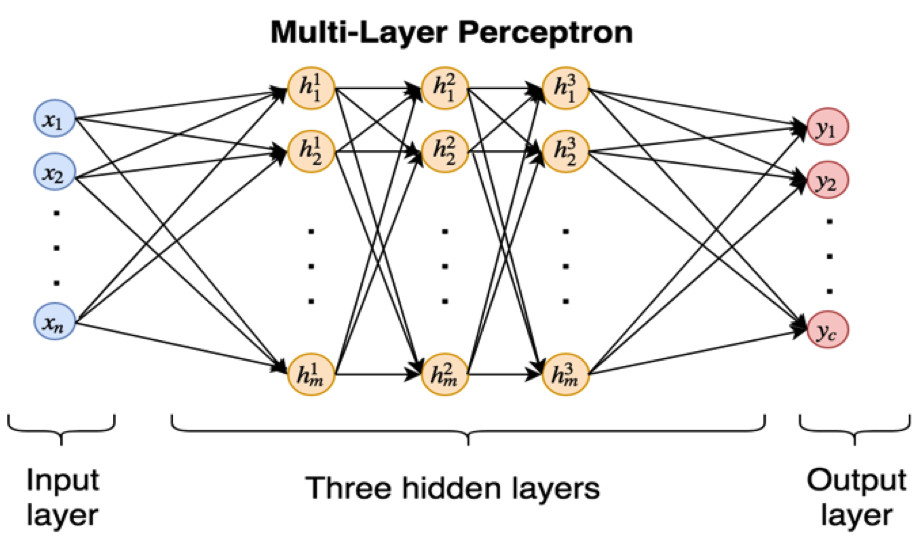

Artificial neural network structures can have many interconnected layers of neurons. The more layers, the deeper the network is (see Figure 2). The notion of depth implies that the layers are arranged in such a way that each neuron receives information from neurons in the previous layer, until the information reaches the last layer, where the network predicts an output.

Figure 2: An example of a multi-layer perceptron

The originality of all this is that the results of the first layer of neurons serve as input to the calculation of the following layer. This layered operation is what is called deep learning. The more layers and neurons, the higher the number of parameters of the model, and hence the more complex the task of training the model to reproduce or explain a certain phenomenon. Deep learning uses several non-linear layers to learn representations of generally complex functions from data. This is achieved via a collection of methods and techniques that make the calibration of a large number of parameters computationally feasible.
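The layered computation described above, and the way the parameter count grows with depth and width, can be sketched as follows. The layer sizes, the random initialization, and the tanh activation are assumptions made for this illustration; no training is performed.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """One fully connected layer: a row of weights and a bias per output neuron."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(layers, x):
    """Feed x through every layer; each layer's output is the next layer's input."""
    for weights, biases in layers:
        x = [math.tanh(sum(w * v for w, v in zip(row, x)) + b)
             for row, b in zip(weights, biases)]
    return x


def count_parameters(layers):
    """Total number of weights and biases that training would have to calibrate."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
sizes = [4, 8, 8, 1]    # hypothetical layer widths: 4 inputs, two hidden layers, 1 output
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

print(forward(layers, [0.1, 0.2, 0.3, 0.4]))
print(count_parameters(layers))
```

Even this tiny network has 121 parameters (40 + 72 + 9); adding layers or widening them increases that number quickly, which is why training deep networks is computationally demanding.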

The intuition behind why deep learning works so well is that you can see it as a complex network of combined (non-linear) regressions, where one neuron is actually a simple logistic regression (or some variation of it). Since regression works so well, it is not unreasonable to think that a combination of regressions would work even better!

Such structures have been around in the scientific literature for several decades. However, only recent developments, both technological and theoretical, have allowed us to train very deep networks capable of performing at human-like accuracy on very complex tasks such as image and text recognition.

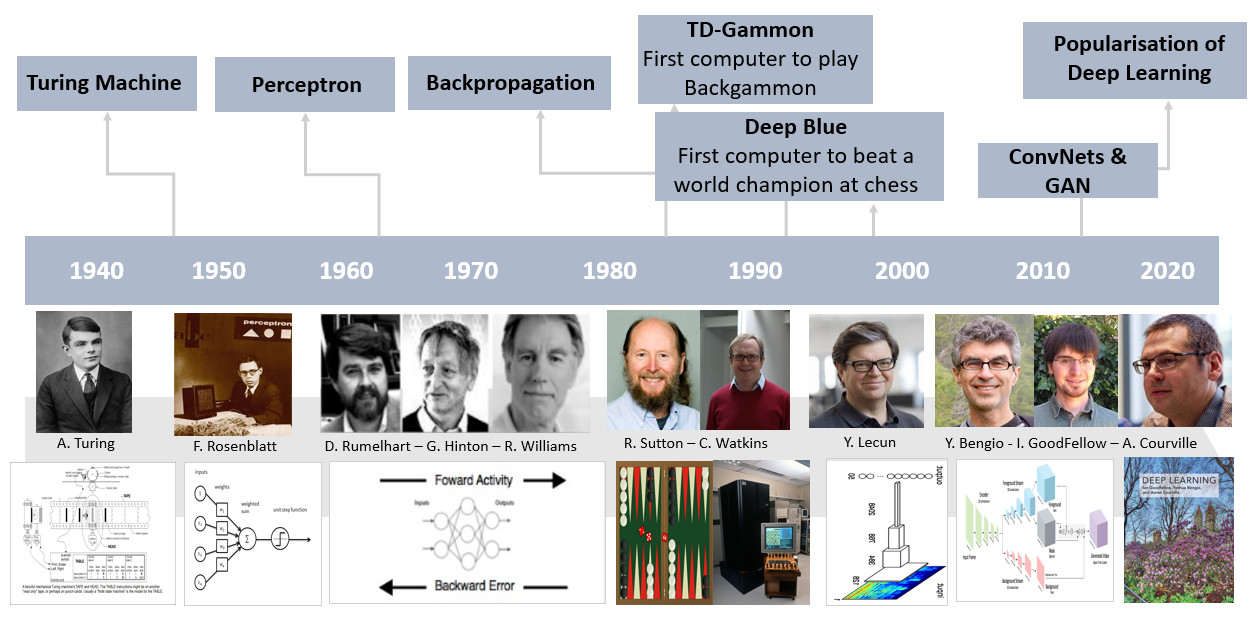

A brief history and the background of Deep Learning

Machine learning has evolved through the years, going through several phases starting in the early 1990s. From 1995 to 2005, the focus was on natural language as well as research. During this period, SVMs and logistic regression were used more than neural networks because of their performance. Then there was a comeback of neural networks, which had started in the 1980s. The concept of deep neural networks goes back at least as far as 1980, with the introduction of the first multi-layer artificial neural networks, called multilayer perceptrons.

In the early 2000s, some researchers focused on neural networks, and more specifically on deep neural networks. Because of the lack of data and the limited performance of computers, this technology did not perform to its full potential at the time. Nonetheless, a few researchers continued working in this direction. Among them are Yoshua Bengio and Yann LeCun, who are forerunners of deep learning alongside Geoffrey Hinton.

The announcement of the 2012 ImageNet challenge results triggered the breakthrough of deep learning. Geoffrey Hinton, Ilya Sutskever, and Alex Krizhevsky from the University of Toronto submitted a deep convolutional neural network architecture called AlexNet. For the first time, a deep neural network was trained that was capable of performing at unprecedented accuracy in tasks such as image recognition. Ever since, different architectures have been put forward and trained with a variety of tasks in mind.

Figure 3: Timeline of scalable machine learning

There is an interesting analogy between deep learning architectures and the way our brain functions. In brain image processing, for instance, neuroscience seems to have found indications that image recognition happens through a multi-step procedure in which simple tasks are performed and then combined, or stacked, to finally produce an understanding of a given image. In deep learning, these simple tasks are performed by layers in the architecture that learn to recognize simple features before assembling all the information into a final output. This layered approach is found across most instances of human behavior: one generally learns to perform tasks in increasing order of complexity, starting with basic assignments and eventually conceptualizing them.

This is the technology behind the boom of artificial intelligence that we are living through now, in which algorithms are being trained to do very complex tasks at a human-like level of performance: image recognition, text understanding, voice recognition, automatic translation, and so on. This revolution is rapidly being integrated into our daily life thanks to the vision of a handful of private players (Google, Apple, Facebook, and Amazon) and to a growing start-up ecosystem that uses this technology to disrupt and bring innovation to all fields of application, such as the Chinese Baidu, Alibaba, and Tencent and, more recently, the Montreal-based startup Element AI. At the origin of all this are the visionary scientists who continued working in this area despite the challenges and initial failures.

Behind this revolution, there is a solid scientific foundation, with mathematics, statistics, and computer science at the heart of the techniques and methods of what is commonly referred to as artificial intelligence. In order to implement and seamlessly integrate this technology into existing processes in industry, a new breed of professionals and technicians is needed: one with solid foundations in mathematics, a deep understanding of the theory and practice behind the models, and strong skills in the computer implementation of such architectures.

Machine Learning Applications

In this context of great enthusiasm for everything AI-related, there is an unprecedented amount of economic activity being created around this scientific field (see Figure 4). The financial and insurance sector is no exception, and it is in the process of integrating these new technologies as well. Machine learning is expected to have a profound impact on all the structures of the financial and insurance industry. Within a few years, this technology will bring about changes at all levels of financial activity, such as trading, risk analysis and IT, risk management, credit granting, and portfolio management. Any instance where decisions have to be made based on a human understanding of a particular situation or environment can potentially be automated. In the insurance industry, machine learning will improve existing relationships with customers and sales agents and help find ways to turn data into business value to drive profitable growth.

These changes are driven not only by the recent availability of the mathematical and computational technology but also by the rapid evolution of technology in other fields, which allows for an impressive amount of data collection and is changing the environment and the way in which business and daily life are conducted. Take, for instance, energy consumption and production. The declining costs of solar and wind technologies have led to increasing interest in and opportunities for renewable energy systems. Smart grid technology is the key to an efficient use of distributed energy resources. The complexity and heterogeneity of the smart grid (electric vehicles, smart meters, intermittent renewable energy, smart buildings, etc.) and the high volume of information to be processed have created a natural need for artificial intelligence techniques that can make sense of and exploit this new environment efficiently.

We now discuss in more detail some of the instances and processes in finance and insurance where machine learning can be successfully applied and is poised to be a game changer.

Figure 4: Machine learning methods and their applications

Here are six key applications of artificial intelligence in the financial, insurance, and healthcare industries:

1. Assessing risk

Loan and insurance underwriters have traditionally relied on the limited information provided on applications to assess the risk exposure of their customers. Today, an unprecedented amount of personalized data and metrics is being harvested and stored through traditional means as well as via the connected intelligent applications and systems that are now part of daily life. This information has the potential to draw a portrait of a given individual's behavior in most relevant aspects of life. What we eat, what we buy, the places we go, our levels of physical activity, the people we meet, health metrics, driving behavior and habits, and so on are now potentially available alongside more traditional and readily available characteristics such as age, gender, employment, credit score, driving record, and medical history, to name a few. Machine learning can play a key role in adjusting existing models to include all these metrics.

2. Healthcare

With the increasing cost of healthcare and the availability of detailed individualized clinical, demographic, and behavioral data, machine learning is finding its way into applications seeking to automate healthcare processes ranging from diagnostics to preventive medicine. Patient evaluation procedures, such as assessing the likelihood that a patient will be readmitted to a hospital after treatment, can benefit from machine-learning-enhanced decision-making algorithms that optimize the number of physicians and nurses involved in a given file while maintaining the quality of service. With machine learning algorithms, we can examine scans and images faster and with greater accuracy before benign tumors become malignant. Because screenings require so much time from doctors and technical personnel, these AI assistants reduce healthcare service times and costs.

3. Anomaly detection

The traditional rule-based approach to identifying anomalies or rare events, which responds to alerts based on static thresholds, is not suited to today's dynamic environment, where the amount of data available for a single profile is as large as it is diverse. For fraud detection, most of the crucial information is unstructured data, which is hard to analyze and is thus rarely taken into account. This is where deep learning can play a key role in transforming the business of fraud detection: by using unstructured data to provide valuable insight, it enhances the accuracy of the solution while reducing the false alerts triggered by static thresholds.
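The weakness of a static threshold can be illustrated with a small sketch. Note the assumptions: the fixed limit, the transaction histories, and the z-score rule are all hypothetical, and the profile-based check is a simple statistical stand-in for a learned model, not a deep learning method.

```python
from statistics import mean, stdev

STATIC_LIMIT = 1000.0  # hypothetical fixed alert threshold, identical for everyone


def static_alert(amount):
    """Rule-based check: the same fixed limit for every customer."""
    return amount > STATIC_LIMIT


def profile_alert(history, amount, z_limit=3.0):
    """Flag an amount that is far from this customer's own past behavior."""
    mu, sigma = mean(history), stdev(history)
    return abs(amount - mu) > z_limit * sigma


# Hypothetical transaction histories for two customer profiles.
small_spender = [20.0, 35.0, 25.0, 30.0, 40.0]
large_spender = [1200.0, 1500.0, 1100.0, 1400.0, 1300.0]

# An unusual 600 for the small spender: missed by the static rule, caught by the profile rule.
print(static_alert(600.0), profile_alert(small_spender, 600.0))
# A routine 1350 for the large spender: a false alert from the static rule only.
print(static_alert(1350.0), profile_alert(large_spender, 1350.0))
```

The static rule both misses the unusual transaction and raises a false alert on the routine one, because it ignores what is normal for each profile.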

4. Natural Language Processing

State-of-the-art Natural Language Processing (NLP) algorithms have attained human-like levels of performance. Automatic translation, sentiment analysis, and text classification are some examples of this technology. One application is the development of chatbots, or question-answering systems, capable of understanding complex textual commands and responding in a human-like fashion. Such algorithms are going to gradually replace customer service agents, drastically improving how core operations are run and reducing their costs. We have to distinguish between Retrieval-Based (easier) and Generative (harder) approaches. A retrieval-based model uses a repository of predefined responses and some kind of heuristic to pick an appropriate response from a fixed set based on the input and the context. A generative model generates a new response from scratch. Deep Learning techniques can be used for both, but research seems to be moving in the generative direction. Customer service and corporate management will be the first to benefit from these applications in the financial and insurance sector.
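A retrieval-based responder of the kind described above can be sketched in a few lines. The repository of responses, the word-overlap (Jaccard) heuristic, and the score cutoff are all hypothetical choices made for this illustration; a production system would typically use a learned similarity measure instead.

```python
import re

# Hypothetical repository of predefined responses, keyed by example questions.
RESPONSES = {
    "how do i file a claim": "You can file a claim online or through your broker.",
    "what does my policy cover": "Coverage details are listed in your policy document.",
    "how can i update my address": "You can update your address in your account settings.",
}
FALLBACK = "Sorry, I did not understand. Could you rephrase?"


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a, b):
    """Word-overlap similarity between two token sets (0 = disjoint, 1 = identical)."""
    return len(a & b) / len(a | b) if a | b else 0.0


def reply(message, min_score=0.2):
    """Pick the predefined response whose example question best matches the message."""
    tokens = tokenize(message)
    best = max(RESPONSES, key=lambda q: jaccard(tokens, tokenize(q)))
    if jaccard(tokens, tokenize(best)) < min_score:
        return FALLBACK
    return RESPONSES[best]


print(reply("How do I file a claim for my car?"))
print(reply("Tell me a joke"))
```

The responder can only ever return one of its stored answers, which is what makes this approach easier and more predictable than a generative model that composes a new response word by word.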

5. Digitization

Optical Character Recognition (OCR) deals with processing images and translating them into text. OCR has incredible cost-saving potential in high-volume, highly manual, low-value-added activities such as processing bills and invoices. Another application is pattern recognition, an automated process of identifying features in an image. It enables biometrics and signature recognition, among other things, and has useful applications in fraud detection and security.

6. Image Recognition

Over the past five years, we have seen important developments in image recognition and classification, automatic translation, autonomous driving, and music generation. These advances were due to deep learning approaches. Learning from images to recognize faces, objects, and situations, and to answer queries about images, is not only possible now but is achieved with unprecedented precision. Identifying faces, objects, gender, or the number of elements, or recognizing the breed of a dog in a photograph, are tasks that can now be performed using deep neural network architectures. In this context, image classification has great potential in insurance claim processing. There are now millions of classified images that constitute a solid knowledge base for developing insurance algorithms that take an image as input in the decision-making process. The detection of fraudulent claims, or simple claim assessment, can be automated via images, thus streamlining the claim filing process.

We must say that the choice of the picture below (see Picture 1) is not arbitrary. In the 1990s, we would probably have taken Picture 1 for a science fiction photo. Today, this dream has become a reality. Indeed, drones are already part of our ecosystem.

Machine learning (ML) integrated into drones will allow insurers to significantly reduce their costs. According to the Insurance Information Institute, fraudulent claims account for 10% of the losses in property and casualty insurance.

Consider an extreme event after which an insurer receives a significant number of claims for damages that were in fact caused prior to the event. Drones can be used to take pictures of insured houses periodically, protecting insurers against this type of fraudulent claim following a natural catastrophe or other extreme event. With machine learning, we are able to automatically process aerial images, evaluate the damage caused by hail, and assess the extent of the damage.

Picture 1: A drone delivery heading towards the city center

Will AI replace artists?

An interesting feature of deep learning structures is that, just as they can be trained to recognize or classify elements (sound, image, text, etc.), they can also be trained to produce original elements that follow or resemble observed examples. This has led to interesting applications in which music, text, and paintings are generated by AI algorithms, giving the impression of witnessing art creation at work. Nonetheless, this remains an illusion: even though we are able to produce algorithms that learn how to paint or write music like golden-age masters, this is just a statistical methodology at play. Now more than ever, the presence of an artist, or of a human element with sensitivity and experience, is essential to the application of new techniques for the common good.

Final Word

While we may be far from having true artificial intelligence the way we have seen it depicted in countless movies, that is, sentient intelligence with self-awareness, there has been dramatic technological progress in this science, and this trend will surely continue. It is now part of the landscape, and it is permeating daily life in all its subtleties. Demand for services is evolving and will follow these trends in technology. Insurance and finance are no exception, and the sector must step up to the challenge and start equipping itself with the tools and expertise needed to deliver the next generation of products. This will mean changing traditional approaches to risk management, insurance, marketing, pricing, and more.

The possibilities are endless and the time is now to start moving in this direction. The rest of the economy is already on the move!

About Koïos Intelligence

Founded in 2017, Koïos Intelligence's mission is to empower the insurance and financial industry with the next generation of intelligent and customized systems that are supported by Artificial Intelligence, statistics and operational research. Combining the knowledge of our lead experts in Insurance, Finance and Artificial Intelligence, Koïos is developing new technologies that redefine the interactions between insurers, brokers and customers.